Hosting dbt Documentation on Google Cloud Run: A Step-by-Step Guide

For modern data teams, robust documentation is crucial for understanding how work gets done and for keeping projects maintainable. dbt (data build tool) addresses this need with dbt Docs, a feature that automatically generates documentation for your data models. In this article, I’ll walk you through hosting your dbt Docs on Google Cloud Run. Because Cloud Run is a managed, scalable platform for containers, it gives you a simple way to make your dbt project documentation accessible to everyone on your team.

Overview

We'll focus on three main steps in this process:

- Integrating Your GitHub Project with Cloud Build: Connecting the repository lets Cloud Build pick up your dbt project from GitHub automatically.

- Converting Your dbt Project into a Docker Image: We'll create a Docker image that generates and serves the documentation.

- Publishing This Image as a Service on Cloud Run: We'll make your dbt Docs accessible by using your Docker image as a Cloud Run service.

Integrating Your GitHub Project with Cloud Build

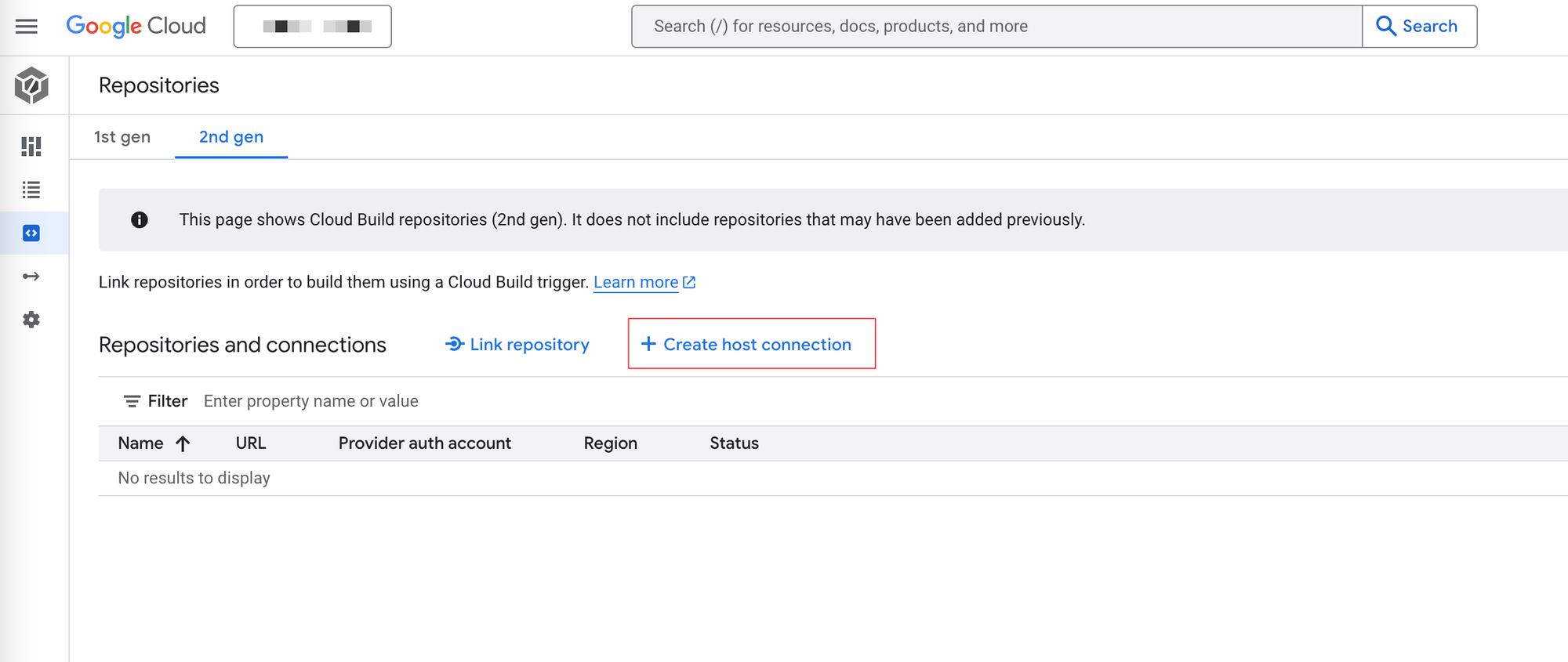

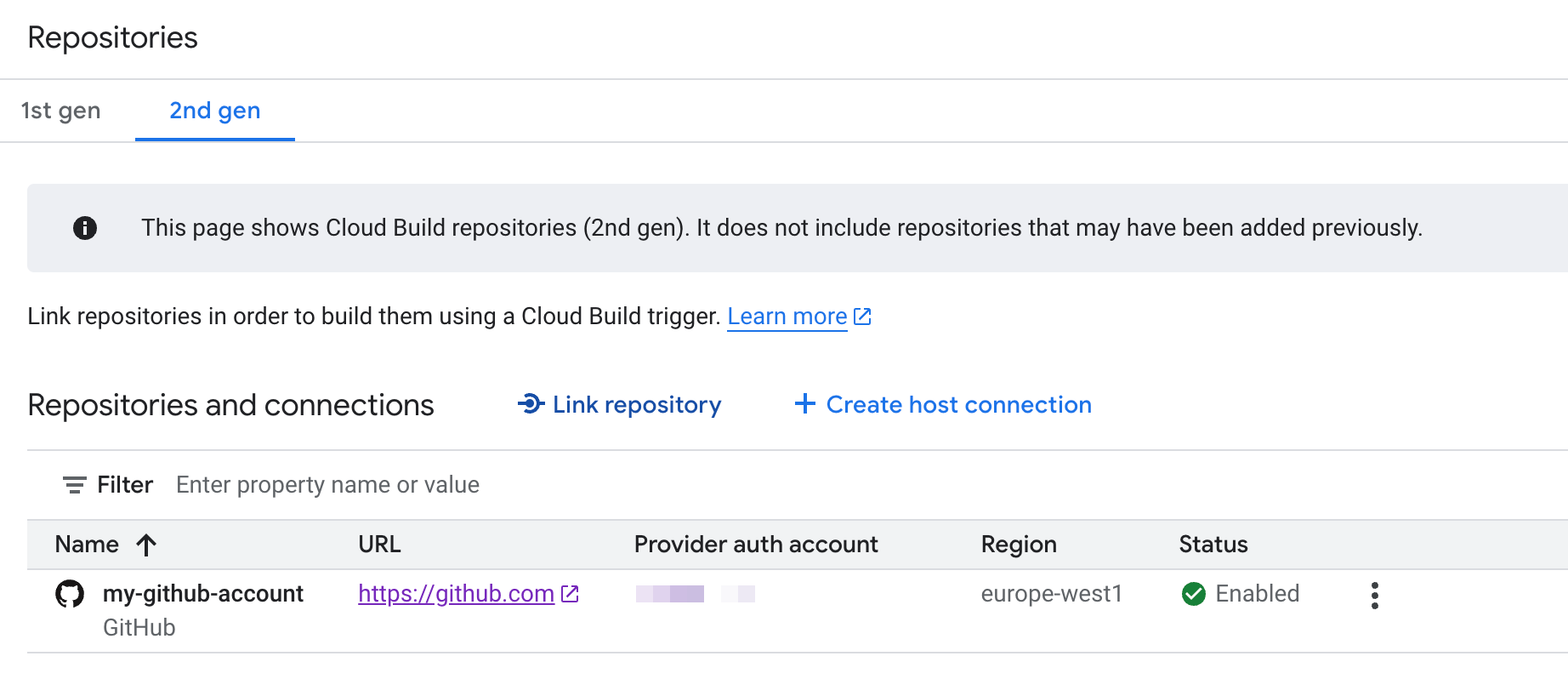

- Open the Cloud Build page in the Google Cloud Console and click "Create host connection."

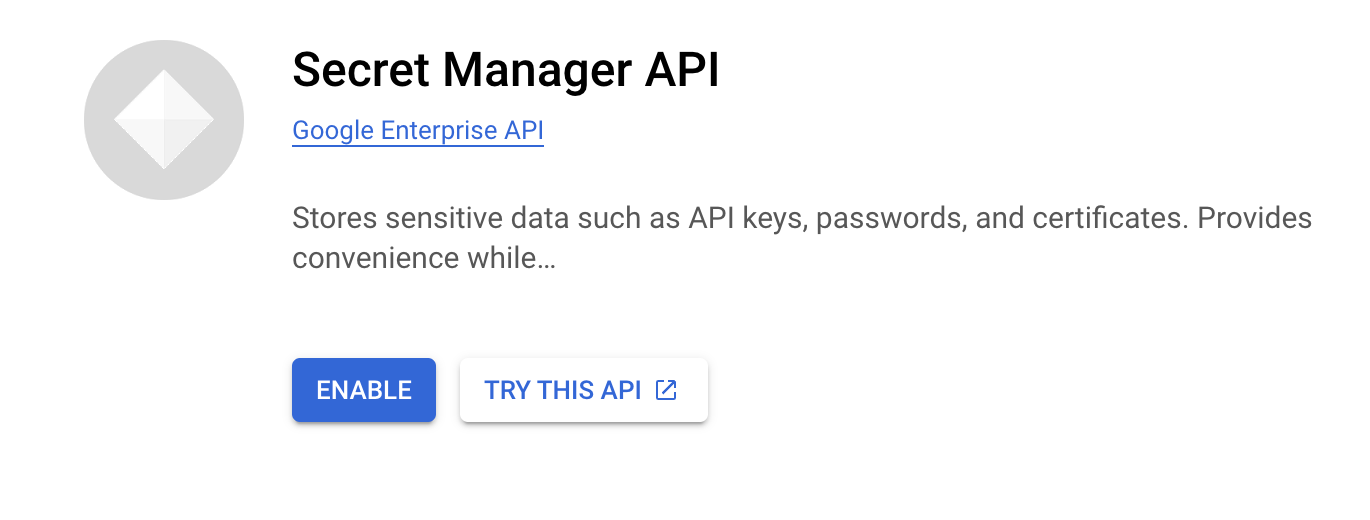

- Enable the Secret Manager API.

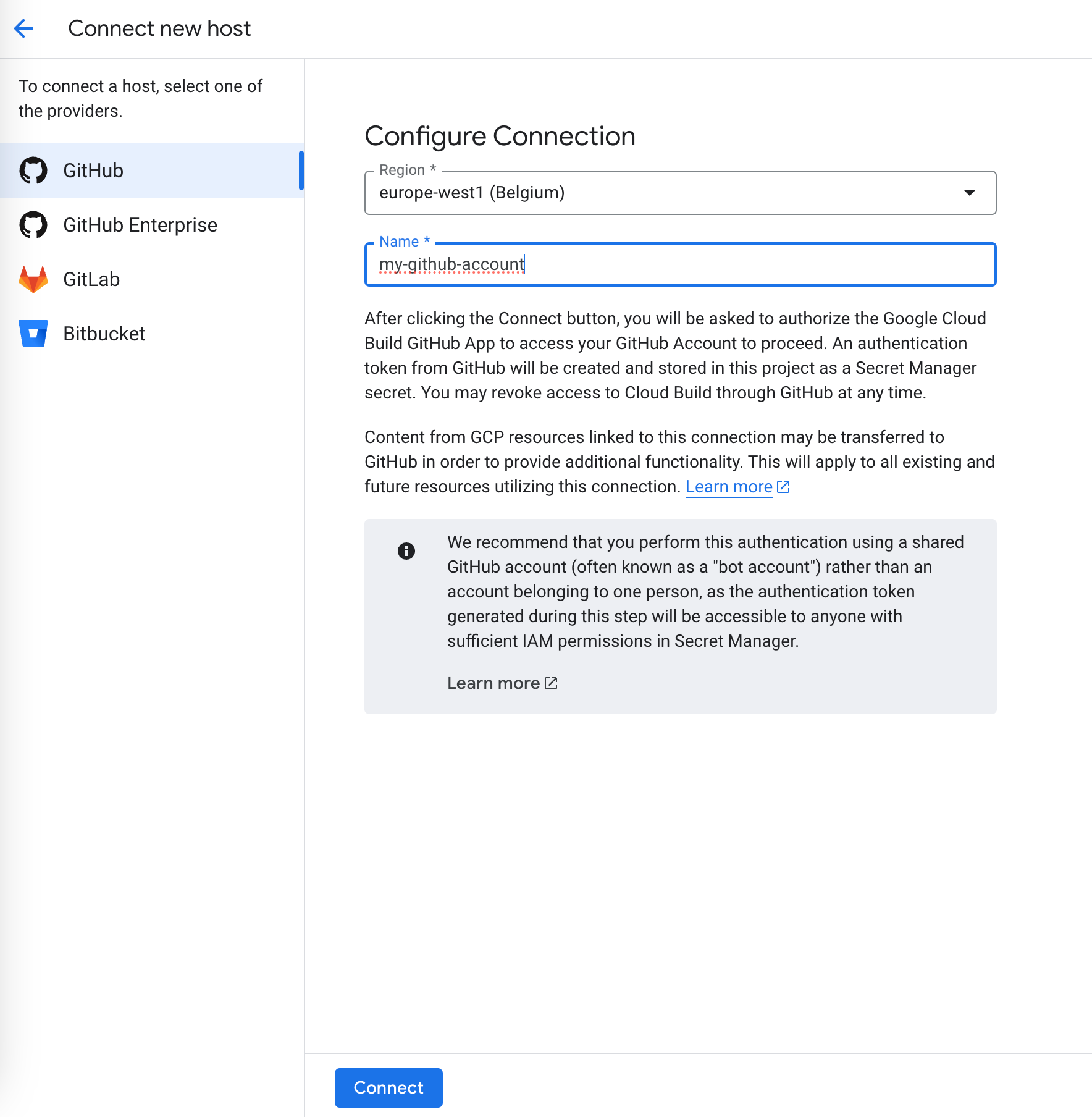

- Choose a region and give your connection a name.

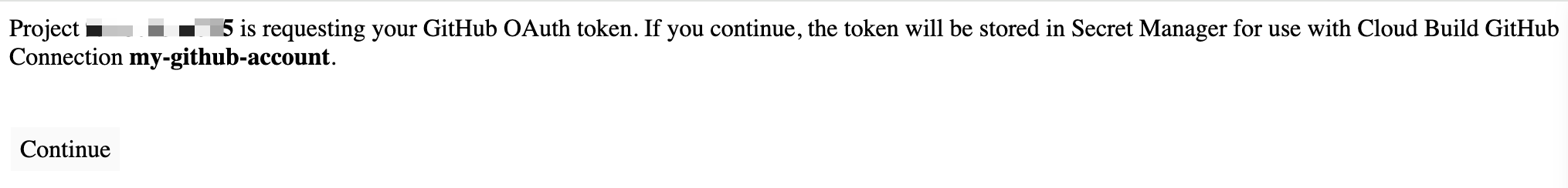

- A pop-up window will open, and Cloud Build will start the authentication process. Click the "Continue" button.

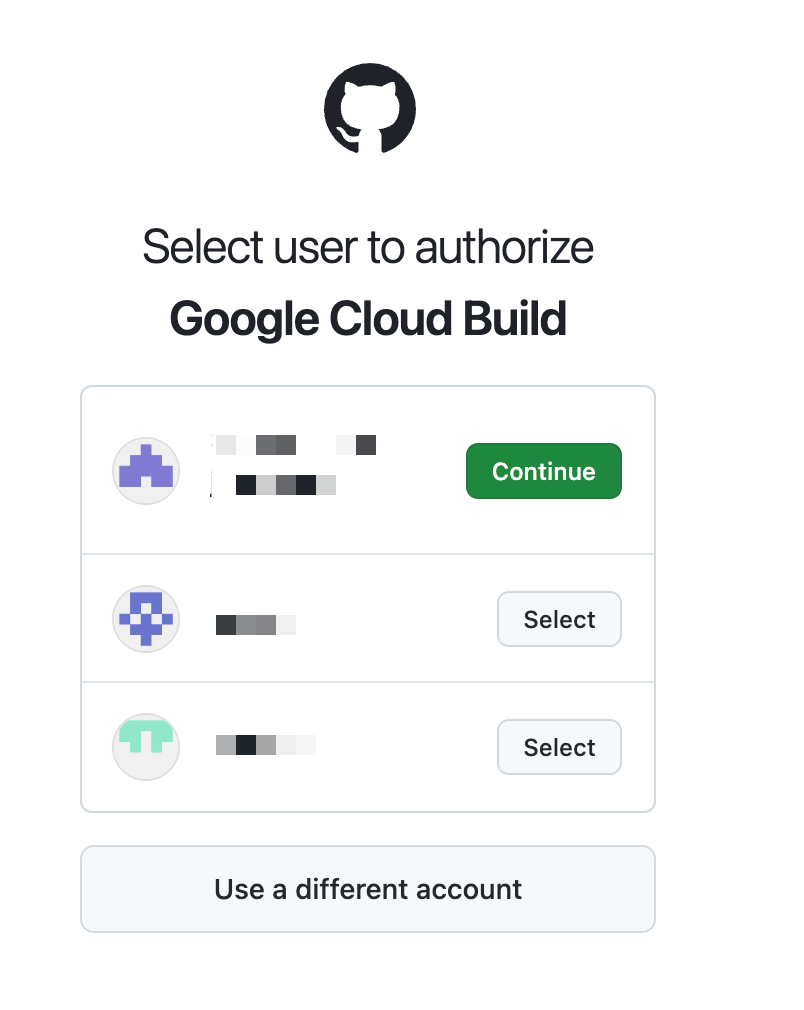

- Choose your GitHub account.

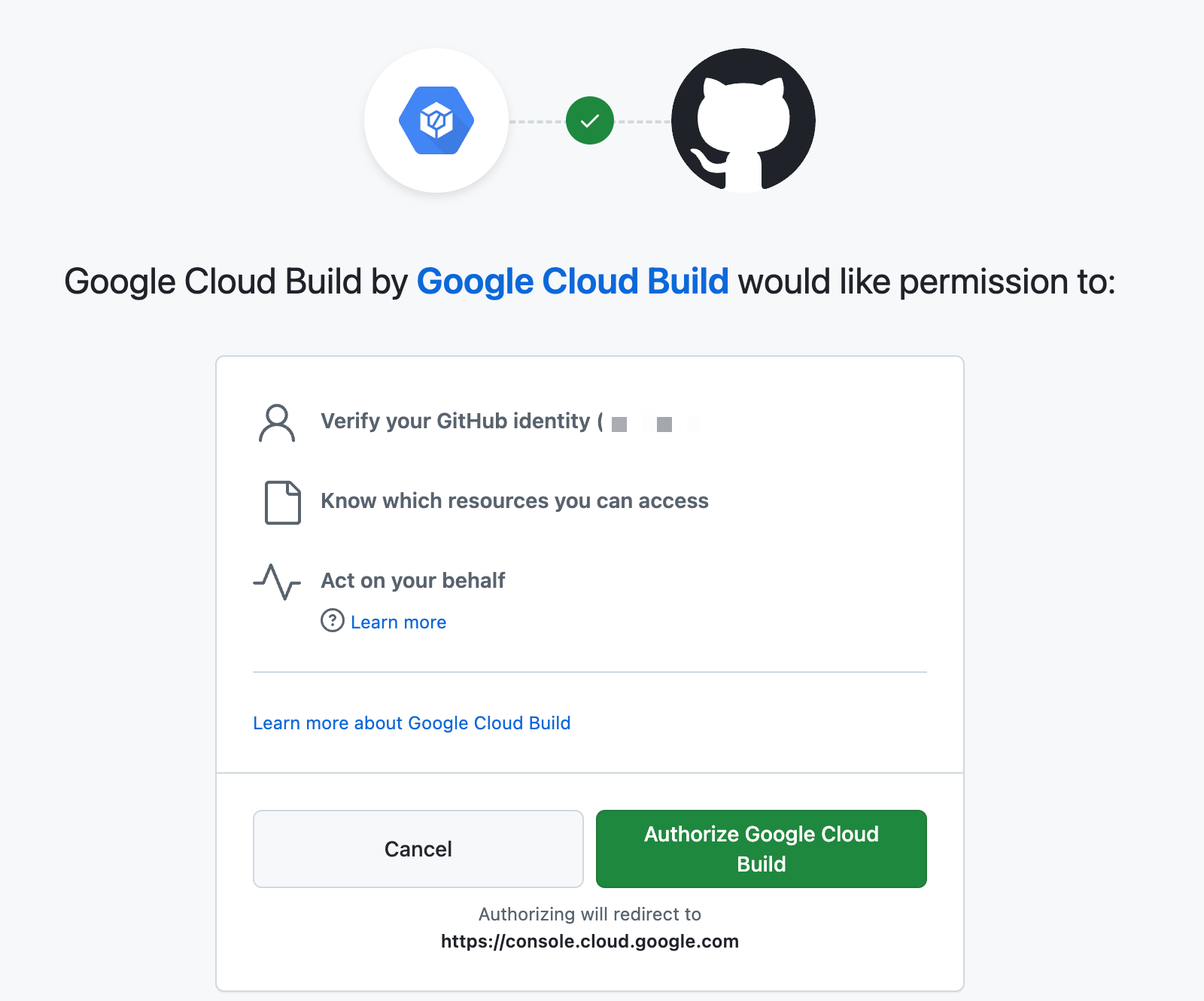

- Authorize Google Cloud Build.

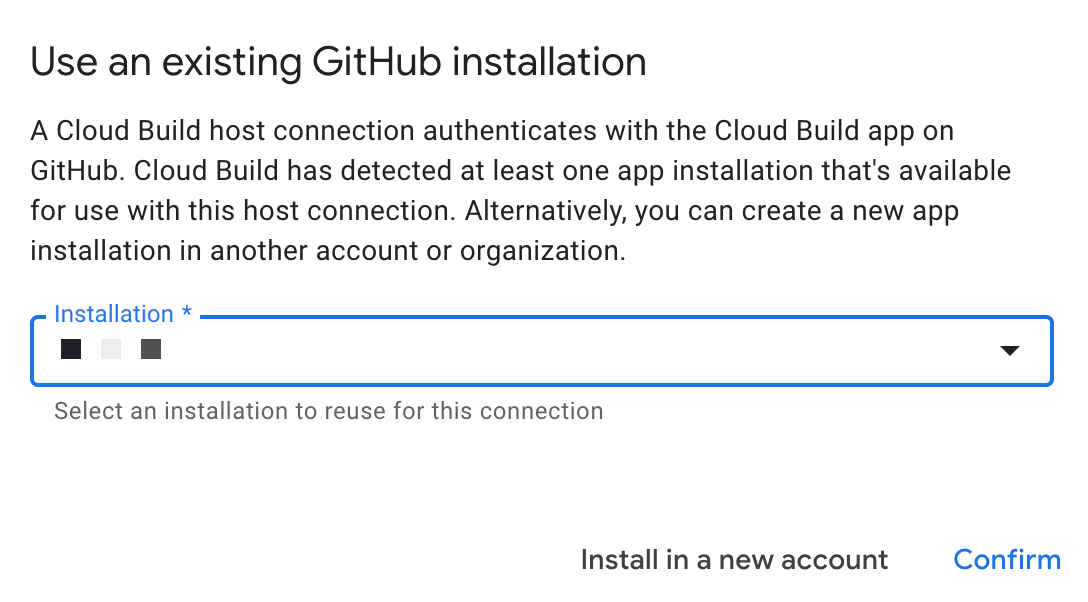

- Choose your GitHub user and press the "Confirm" button.

- Your GitHub account is now connected. Next, link the repository.

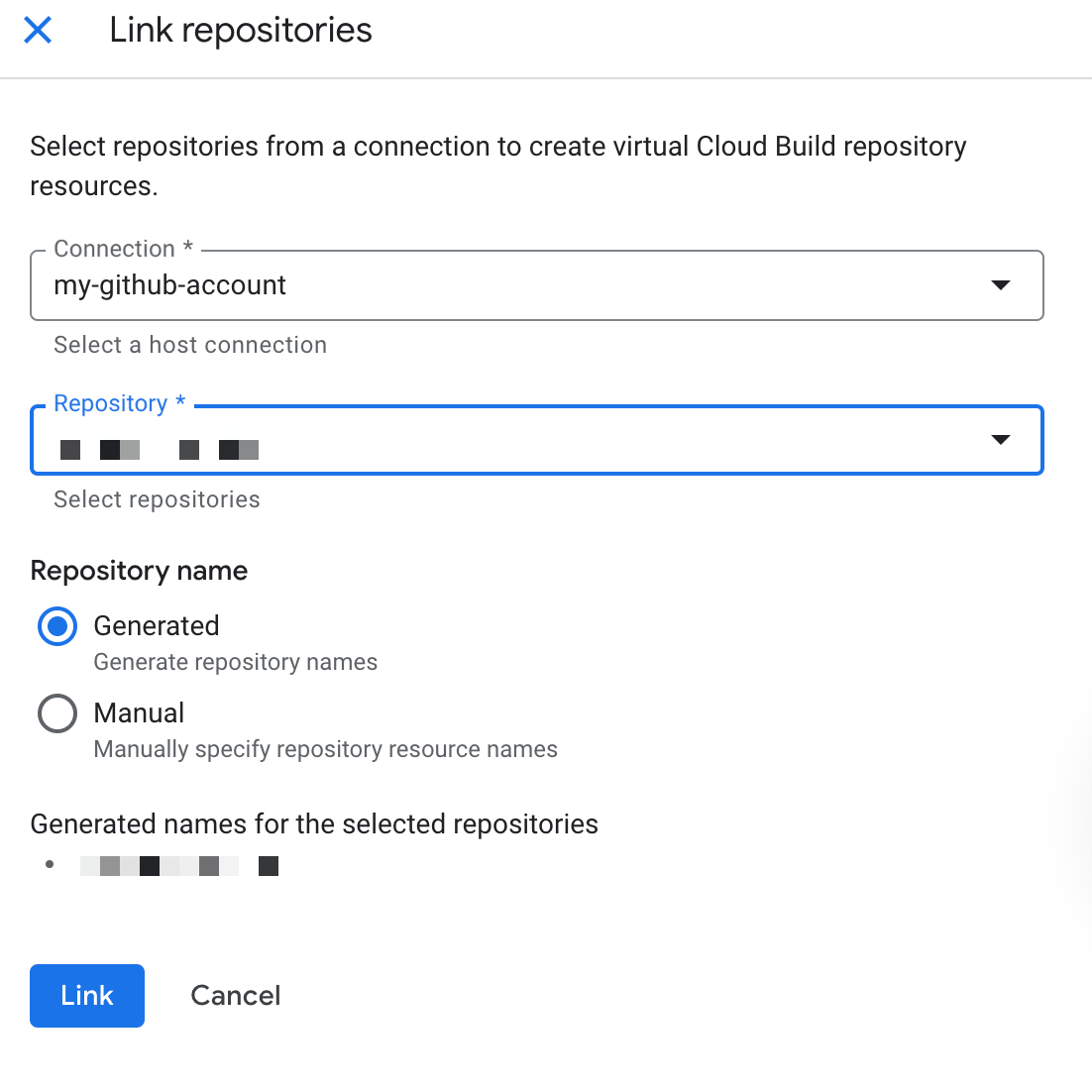

- Select your connection and repository. Then press the "Link" button.
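If you prefer the command line, the console steps above roughly correspond to a few gcloud commands. The Python sketch below only assembles those commands as argument lists and prints them; it does not run anything. The project, connection, repository, region, and GitHub URL are hypothetical placeholders, and the command groups used (gcloud services enable, gcloud builds connections create github, gcloud builds repositories create) should be checked against the gcloud reference for your SDK version. Creating the connection from the CLI still involves authorizing Cloud Build on GitHub in a browser.

```python
"""Sketch: build (but do not run) gcloud commands mirroring the console steps.

Every name below (project, connection, repository, region, URL) is a
hypothetical placeholder; substitute your own values.
"""
import shlex
from typing import List


def enable_secret_manager_cmd(project_id: str) -> List[str]:
    # Cloud Build stores the connection's GitHub credentials in Secret Manager,
    # so the API must be enabled first.
    return ["gcloud", "services", "enable", "secretmanager.googleapis.com",
            f"--project={project_id}"]


def create_connection_cmd(name: str, region: str) -> List[str]:
    # CLI counterpart of "Create host connection"; the GitHub authorization
    # itself is still completed in a browser.
    return ["gcloud", "builds", "connections", "create", "github", name,
            f"--region={region}"]


def link_repository_cmd(repo: str, remote_uri: str, connection: str,
                        region: str) -> List[str]:
    # CLI counterpart of selecting the connection and repository, then "Link".
    return ["gcloud", "builds", "repositories", "create", repo,
            f"--remote-uri={remote_uri}", f"--connection={connection}",
            f"--region={region}"]


if __name__ == "__main__":
    commands = [
        enable_secret_manager_cmd("my-project"),
        create_connection_cmd("github-conn", "europe-west1"),
        link_repository_cmd("dbt-repo", "https://github.com/my-org/my-repo.git",
                            "github-conn", "europe-west1"),
    ]
    for cmd in commands:
        # shlex.join (Python 3.8+) renders a copy-pasteable shell command.
        print(shlex.join(cmd))
```

Keeping the commands as lists rather than strings makes them easy to pass to subprocess.run later without shell-quoting surprises.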

Creating the Docker Image for dbt

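The following Dockerfile installs dbt with the BigQuery adapter, generates the documentation at build time, and serves it on port 8080. Because dbt runs with --profiles-dir /dbt, your profiles.yml is expected inside the copied dbt project folder, and keys.json must sit at the root of the build context: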

```dockerfile
FROM python:3.11-slim

RUN pip install dbt-core dbt-bigquery

WORKDIR /dbt

# Copy the dbt project
COPY /<githubProjectName>/dbt/<DBTProjectName> .

# Service account JSON file
COPY keys.json .

# Generate manifest file
RUN dbt parse --profiles-dir /dbt

# Generate documentation
RUN dbt docs generate --profiles-dir /dbt --no-compile

# Port to be used in Cloud Run
EXPOSE 8080

# Start the documentation service
CMD ["dbt", "docs", "serve", "--port", "8080", "--host", "0.0.0.0", "--profiles-dir", "/dbt"]
```

Cloud Build Configuration

The following dbtdocs-cloudbuild.yaml file builds the Docker image, pushes it to Artifact Registry, and deploys it as a service on Cloud Run:

```yaml
serviceAccount: '...iam.gserviceaccount.com'
options:
  logging: CLOUD_LOGGING_ONLY
steps:
  # Build the Docker image
  - name: 'gcr.io/cloud-builders/docker'
    args: ['build', '-t', '<region>-docker.pkg.dev/<project-id>/<repository name>/dbt-docs', '-f', '<githubProjectName>/dbt/dbtcore.Dockerfile', '.']
  # Push the image to Artifact Registry
  - name: 'gcr.io/cloud-builders/docker'
    args: ['push', '<region>-docker.pkg.dev/<project-id>/<repository name>/dbt-docs']
  # Deploy (or update) the service on Cloud Run
  - name: 'gcr.io/google.com/cloudsdktool/cloud-sdk'
    entrypoint: gcloud
    args:
      - 'run'
      - 'deploy'
      - 'dbt-docs'
      - '--image'
      - '<region>-docker.pkg.dev/<project-id>/<repository name>/dbt-docs'
      - '--platform'
      - 'managed'
      - '--region'
      - '<region>' # or your preferred region
      - '--allow-unauthenticated'
```

Creating a Cloud Build Trigger

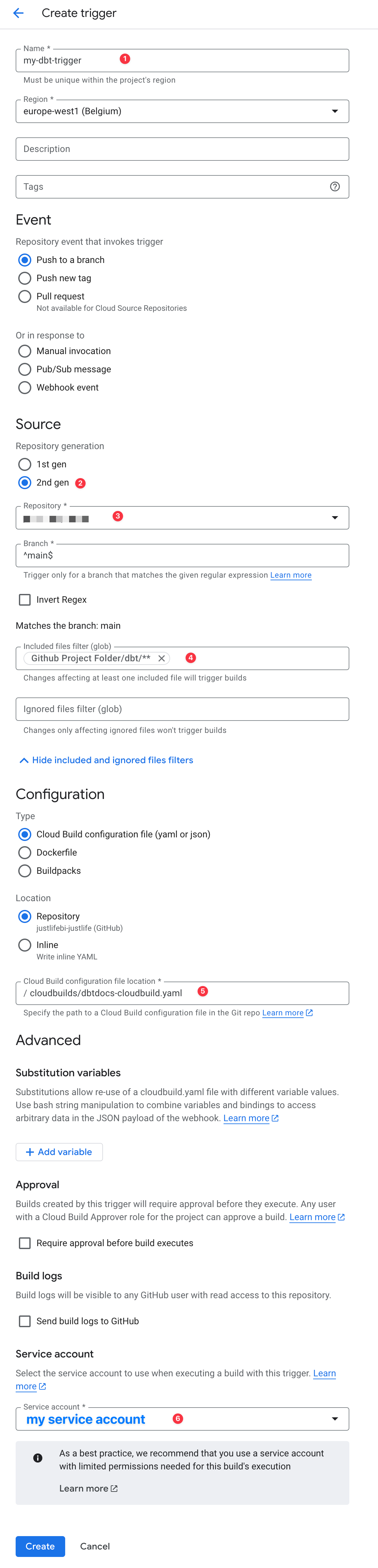

- Define the trigger: Create a trigger in Cloud Build with the following settings:

  - File filter: Use an included-files filter such as GitHub Project Folder/dbt/** so the trigger runs only when files in your dbt project change.

  - YAML file path: Specify the path to the build configuration, for example cloudbuilds/dbtdocs-cloudbuild.yaml.

  - Service account: Select the service account referenced in the YAML file.

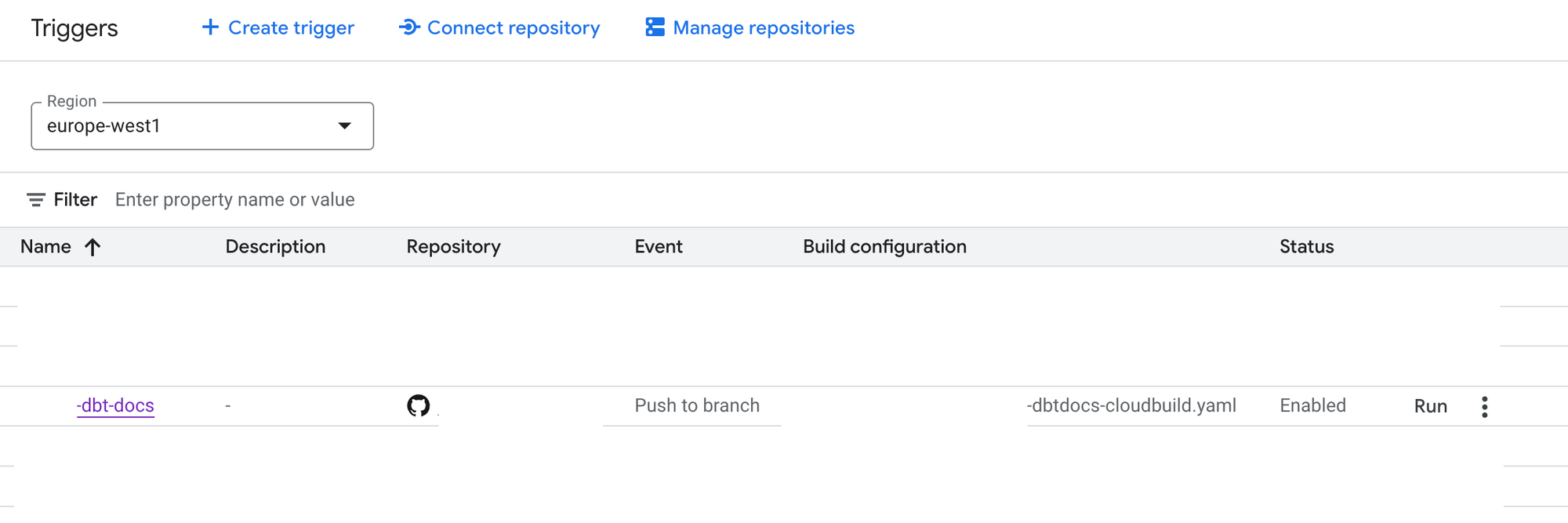

- Run the trigger: Run the trigger and watch it build the Docker image and deploy it as a service on Cloud Run.

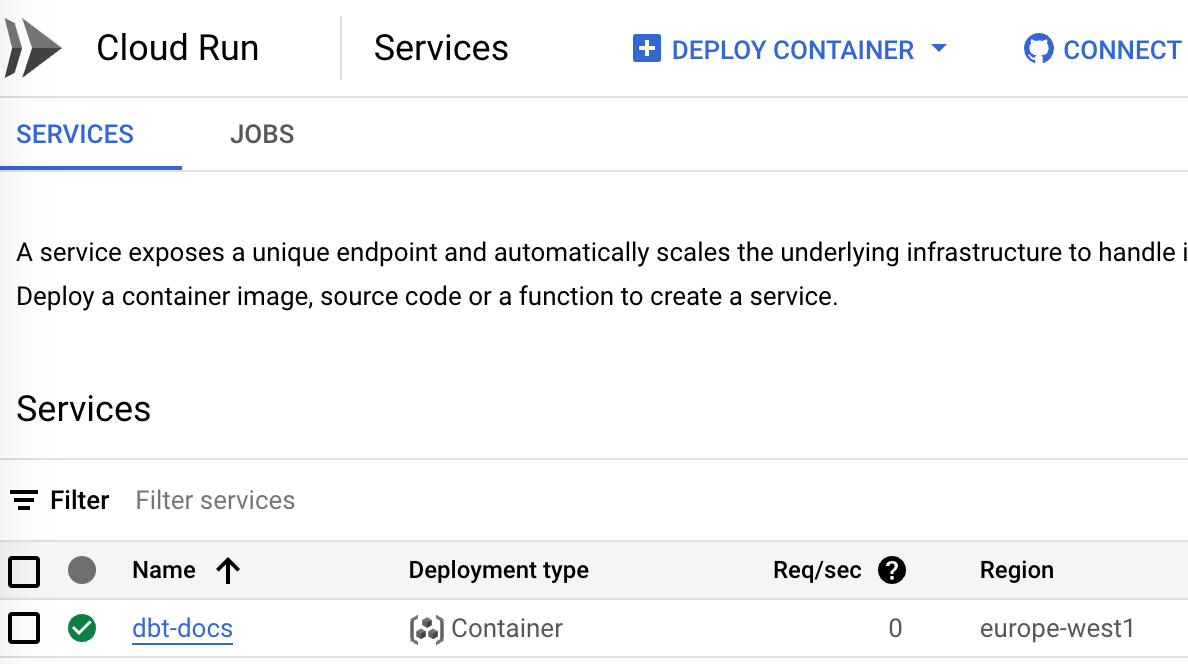

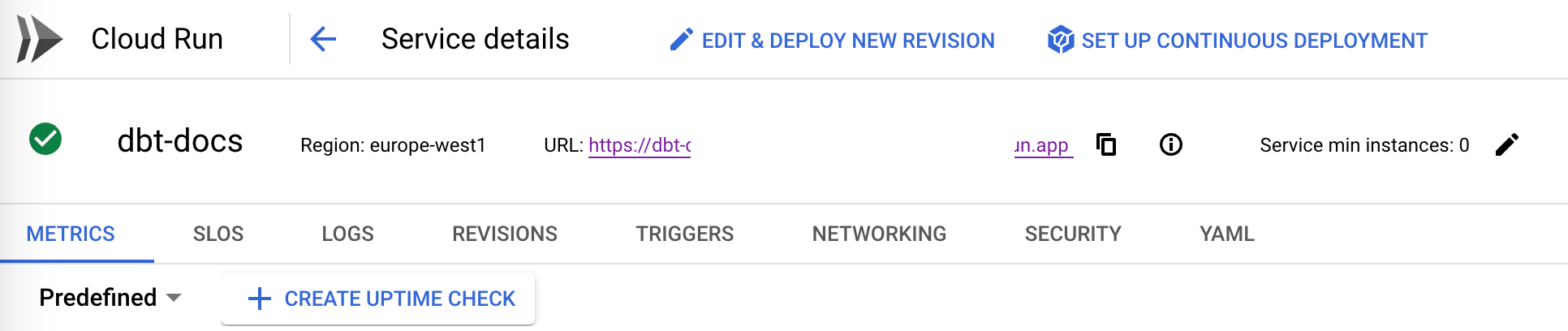

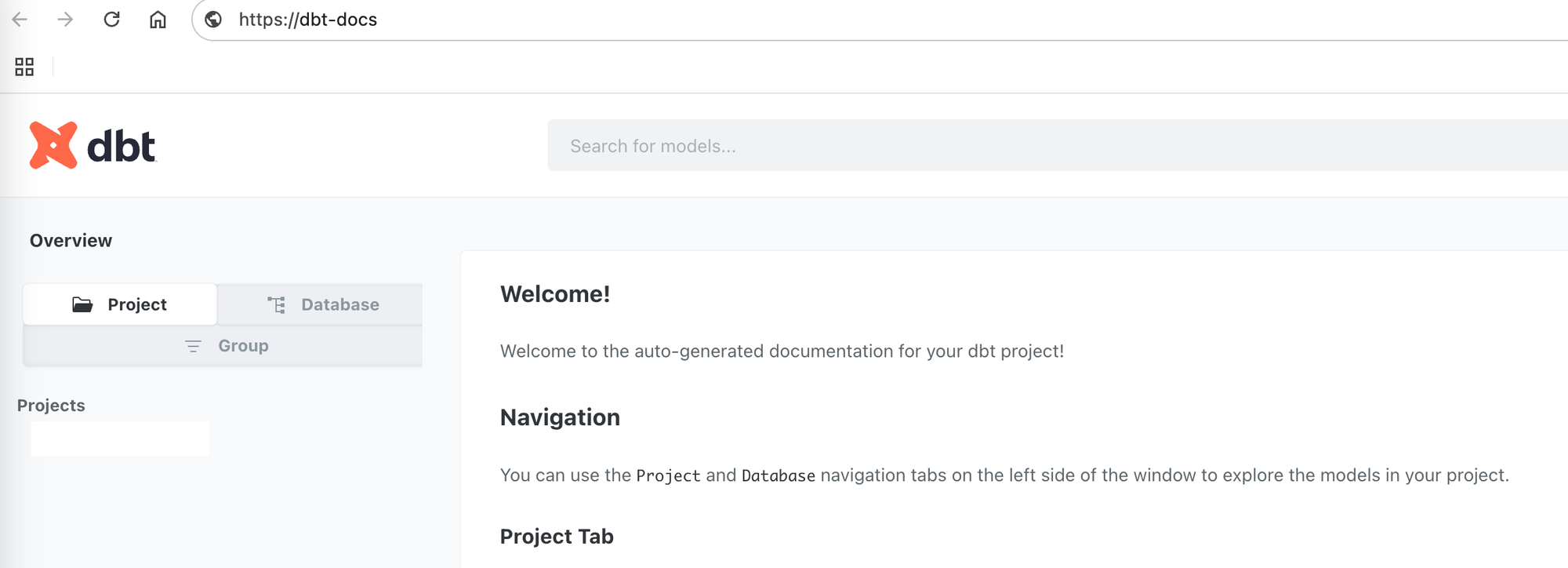
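The included-files filter is what keeps the trigger from rebuilding the docs on unrelated commits. The Python sketch below approximates that check with fnmatch, so treat it as an illustration only: Cloud Build's own glob matching may differ in edge cases, and the folder name my-project and the changed paths are hypothetical. It also assembles an equivalent gcloud builds triggers create github command as an argument list, assuming the flags documented in the gcloud reference (--repository, --region, --branch-pattern, --build-config, --included-files, --service-account); verify them against your SDK version before use.

```python
"""Sketch: approximate the trigger's included-files filter and build a trigger command.

fnmatch stands in for Cloud Build's glob matching and may differ in edge cases.
All names and paths are hypothetical placeholders.
"""
from fnmatch import fnmatchcase
from typing import Iterable, List


def should_trigger(changed_files: Iterable[str], included: Iterable[str]) -> bool:
    """Return True if any changed file matches any included-files pattern."""
    patterns = list(included)
    return any(fnmatchcase(path, pat) for path in changed_files for pat in patterns)


def create_trigger_cmd(name: str, repository: str, region: str, branch: str,
                       build_config: str, included: str,
                       service_account: str) -> List[str]:
    """Build (but do not run) a gcloud command that creates the trigger."""
    return ["gcloud", "builds", "triggers", "create", "github",
            f"--name={name}",
            # projects/PROJECT_ID/locations/REGION/connections/CONNECTION/repositories/REPO
            f"--repository={repository}",
            f"--region={region}",
            f"--branch-pattern={branch}",
            f"--build-config={build_config}",
            f"--included-files={included}",
            # projects/PROJECT_ID/serviceAccounts/SERVICE_ACCOUNT_EMAIL
            f"--service-account={service_account}"]


if __name__ == "__main__":
    pattern = ["my-project/dbt/**"]
    print(should_trigger(["my-project/dbt/models/orders.sql"], pattern))  # True
    print(should_trigger(["my-project/README.md"], pattern))              # False
```

With fnmatch, a trailing ** (like a single *) also matches slashes, which is why files in nested model folders still count as changes to the dbt project.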


When you check your service in Cloud Run, you'll see a URL where your dbt documentation is hosted. Click on this link to access the complete documentation of your dbt project, where you'll find detailed information about your data models, transformations, and lineage graphs.
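To confirm the deployment worked, you can run a quick smoke test against the service URL, which is shown in the Cloud Run console or printed by gcloud run services describe dbt-docs --region=REGION --format='value(status.url)'. The sketch below uses only the Python standard library and assumes that dbt docs serve answers GET / with the generated index.html; it treats an HTTP 200 response containing an opening html tag as success. The function name docs_are_up is hypothetical.

```python
"""Sketch: smoke-test a hosted dbt Docs site using only the standard library.

Assumes the service returns the generated index.html for GET /.
"""
import urllib.request


def docs_are_up(url: str, timeout: float = 10.0) -> bool:
    """Return True if `url` answers with HTTP 200 and an HTML body."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            if resp.status != 200:
                return False
            # Reading the first 64 KiB is enough to spot the HTML document.
            body = resp.read(65536).decode("utf-8", errors="replace")
    except (OSError, ValueError):
        # URLError, HTTPError (4xx/5xx) and timeouts are OSError subclasses;
        # ValueError covers malformed URLs.
        return False
    return "<html" in body.lower()


if __name__ == "__main__":
    import sys
    # Pass your Cloud Run service URL as the first argument.
    if len(sys.argv) > 1:
        print(docs_are_up(sys.argv[1]))
```

Because the service is deployed with --allow-unauthenticated, this check needs no credentials; a service that requires authentication would need an identity token added to the request.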

Conclusion

By following these steps, you can create an automated pipeline that builds and deploys your dbt documentation whenever your project is updated. This setup ensures your team always has access to the latest documentation, making it easier to maintain and understand your data models.

The combination of dbt Docs and Google Cloud Run provides a scalable, maintainable solution for hosting your data documentation. This approach not only makes your documentation easily accessible but also ensures it stays in sync with your codebase through automated deployments.

Thanks for reading.